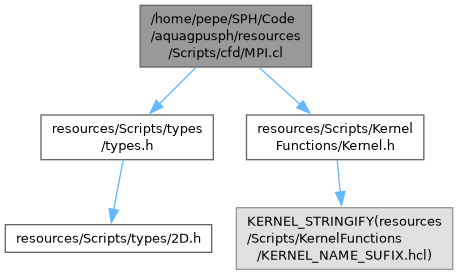

MPI syncing point. More...

Macros | |

| #define | __CLEARY__ 8.f |

| #define | _SHEPARD_ shepard[i] |

| #define | _GRADP_ grad_p[i].XYZ |

| #define | _LAPU_ lap_u[i].XYZ |

| #define | _DIVU_ div_u[i] |

Functions | |

| __kernel void | copy (__global unsigned int *mpi_iset, __global vec *mpi_r, __global vec *mpi_u, __global vec *mpi_dudt, __global float *mpi_rho, __global float *mpi_drhodt, __global float *mpi_m, const __global unsigned int *iset, const __global vec *r, const __global vec *u, const __global vec *dudt, const __global float *rho, const __global float *drhodt, const __global float *m, usize N) |

| Copy the data. | |

| __kernel void | append (__global unsigned int *iset, __global vec *r, __global vec *u, __global vec *dudt, __global float *rho, __global float *drhodt, __global float *m, __global int *imove, const __global usize *mpi_local_mask, const __global unsigned int *mpi_iset, const __global vec *mpi_r, const __global vec *mpi_u, const __global vec *mpi_dudt, const __global float *mpi_rho, const __global float *mpi_drhodt, const __global float *mpi_m, unsigned int mpi_rank, usize nbuffer, usize N) |

| Append the particles received from other processes. | |

| __kernel void | remove (__global int *imove, __global vec *r, __global vec *u, __global vec *dudt, __global float *m, const __global usize *mpi_local_mask, unsigned int mpi_rank, vec domain_max, usize N) |

| Remove the particles sent to other processes. | |

| __kernel void | backup_r (const __global usize *mpi_neigh_mask, const __global vec *mpi_r, __global vec *mpi_r_in, vec r_max, unsigned int mpi_rank, usize N, usize n_radix) |

| Backup the position of the MPI particles to sort that later. | |

| __kernel void | sort (const __global unsigned int *mpi_iset_in, __global unsigned int *mpi_iset, const __global vec *mpi_r_in, __global vec *mpi_r, const __global vec *mpi_u_in, __global vec *mpi_u, const __global float *mpi_rho_in, __global float *mpi_rho, const __global float *mpi_m_in, __global float *mpi_m, const __global usize *mpi_id_sorted, usize N) |

| Sort all the particle variables by the cell indexes. | |

| __kernel void | eos (__global unsigned int *mpi_iset, __global float *mpi_rho, __global float *mpi_p, __constant float *refd, usize N, float cs, float p0) |

| Stiff Equation Of State (EOS) computation. | |

| __kernel void | gamma (const __global int *imove, const __global vec *r, const __global float *rho, const __global float *m, const __global vec *mpi_r, const __global float *mpi_rho, const __global float *mpi_m, __global float *shepard, usize N, LINKLIST_REMOTE_PARAMS) |

| Shepard factor computation. | |

| __kernel void | interactions (const __global int *imove, const __global vec *r, const __global vec *u, const __global float *rho, const __global float *p, const __global vec *mpi_r, const __global vec *mpi_u, const __global float *mpi_rho, const __global float *mpi_p, const __global float *mpi_m, __global vec *grad_p, __global vec *lap_u, __global float *div_u, usize N, LINKLIST_REMOTE_PARAMS) |

| Fluid particles interactions with the neighbours from other processes. | |

Detailed Description

MPI syncing point.

Macro Definition Documentation

◆ __CLEARY__

| #define __CLEARY__ 8.f |

◆ _DIVU_

| #define _DIVU_ div_u[i] |

◆ _GRADP_

| #define _GRADP_ grad_p[i].XYZ |

◆ _LAPU_

| #define _LAPU_ lap_u[i].XYZ |

◆ _SHEPARD_

| #define _SHEPARD_ shepard[i] |

Function Documentation

◆ append()

| __kernel void append | ( | __global unsigned int * | iset, |

| __global vec * | r, | ||

| __global vec * | u, | ||

| __global vec * | dudt, | ||

| __global float * | rho, | ||

| __global float * | drhodt, | ||

| __global float * | m, | ||

| __global int * | imove, | ||

| const __global usize * | mpi_local_mask, | ||

| const __global unsigned int * | mpi_iset, | ||

| const __global vec * | mpi_r, | ||

| const __global vec * | mpi_u, | ||

| const __global vec * | mpi_dudt, | ||

| const __global float * | mpi_rho, | ||

| const __global float * | mpi_drhodt, | ||

| const __global float * | mpi_m, | ||

| unsigned int | mpi_rank, | ||

| usize | nbuffer, | ||

| usize | N ) |

Append the particles received from other processes.

The restored particles has always imove=0 flag

- Parameters

-

imove Moving flags - imove > 0 for regular fluid particles

- imove = 0 for sensors

- imove < 0 for boundary elements/particles

r_in Position \( \mathbf{r} \) u_in Velocity \( \mathbf{u} \) dudt_in Velocity rate of change \( \frac{d \mathbf{u}}{d t} \) rho_in Density \( \rho \) drhodt_in Density rate of change \( \frac{d \rho}{d t} \) m Mass \( m \) mpi_local_mask Incoming processes mask mpi_r Position \( \mathbf{r} \) MPI copy mpi_u Velocity \( \mathbf{u} \) MPI copy mpi_dudt Velocity rate of change \( \frac{d \mathbf{u}}{d t} \) MPI copy mpi_rho Density \( \rho \) MPI copy mpi_drhodt Density rate of change \( \frac{d \rho}{d t} \) MPI copy mpi_m Mass \( m \) MPI copy mpi_rank MPI process index nbuffer Number of buffer particles N Number of particles

◆ backup_r()

| __kernel void backup_r | ( | const __global usize * | mpi_neigh_mask, |

| const __global vec * | mpi_r, | ||

| __global vec * | mpi_r_in, | ||

| vec | r_max, | ||

| unsigned int | mpi_rank, | ||

| usize | N, | ||

| usize | n_radix ) |

Backup the position of the MPI particles to sort that later.

Just the particles coming from other processes are backuped

- Parameters

-

mpi_neigh_mask Incoming processes mask mpi_r Position \( \mathbf{r} \) MPI copy mpi_r_in Position \( \mathbf{r} \) MPI copy r_max The maximum position detected by the Link-List mpi_rank The current process id N Number of particles n_radix Number of elements on mpi_r_in

◆ copy()

| __kernel void copy | ( | __global unsigned int * | mpi_iset, |

| __global vec * | mpi_r, | ||

| __global vec * | mpi_u, | ||

| __global vec * | mpi_dudt, | ||

| __global float * | mpi_rho, | ||

| __global float * | mpi_drhodt, | ||

| __global float * | mpi_m, | ||

| const __global unsigned int * | iset, | ||

| const __global vec * | r, | ||

| const __global vec * | u, | ||

| const __global vec * | dudt, | ||

| const __global float * | rho, | ||

| const __global float * | drhodt, | ||

| const __global float * | m, | ||

| usize | N ) |

Copy the data.

To make the operation as asynchronous as possible, we are copying all the available data, regardless it is useful or not. That is a bit computationally less efficient, but allows to start transfering data ASAP, while we still operate to neglect the particles

It is intended that the fields copied here are the same subsequently exchanged later with the mpi-sync tool

- Parameters

-

mpi_iset Particles set MPI copy mpi_r Position \( \mathbf{r} \) MPI copy mpi_u Velocity \( \mathbf{u} \) MPI copy mpi_dudt Velocity rate of change \( \frac{d \mathbf{u}}{d t} \) MPI copy mpi_rho Density \( \rho \) MPI copy mpi_drhodt Density rate of change \( \frac{d \rho}{d t} \) MPI copy mpi_m Mass \( m \) MPI copy iset Particles set r Position \( \mathbf{r} \) u Velocity \( \mathbf{u} \) dudt Velocity rate of change \( \frac{d \mathbf{u}}{d t} \) rho Density \( \rho \) drhodt Density rate of change \( \frac{d \rho}{d t} \) m Mass \( m \) N Number of particles

◆ eos()

| __kernel void eos | ( | __global unsigned int * | mpi_iset, |

| __global float * | mpi_rho, | ||

| __global float * | mpi_p, | ||

| __constant float * | refd, | ||

| usize | N, | ||

| float | cs, | ||

| float | p0 ) |

Stiff Equation Of State (EOS) computation.

The equation of state relates the pressure and the density fields, \( p = p_0 + c_s^2 \left(\rho - \rho_0 \right) \)

- Parameters

-

mpi_iset Set of particles index. mpi_rho Density \( \rho_{n+1/2} \). mpi_p Pressure \( p_{n+1/2} \). refd Density of reference of the fluid \( \rho_0 \). N Number of particles. cs Speed of sound \( c_s \). p0 Background pressure \( p_0 \).

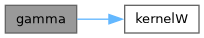

◆ gamma()

| __kernel void gamma | ( | const __global int * | imove, |

| const __global vec * | r, | ||

| const __global float * | rho, | ||

| const __global float * | m, | ||

| const __global vec * | mpi_r, | ||

| const __global float * | mpi_rho, | ||

| const __global float * | mpi_m, | ||

| __global float * | shepard, | ||

| usize | N, | ||

| LINKLIST_REMOTE_PARAMS | ) |

Shepard factor computation.

\[ \gamma(\mathbf{x}) = \int_{\Omega} W(\mathbf{y} - \mathbf{x}) \mathrm{d}\mathbf{y} \]

The shepard renormalization factor is applied for several purposes:

- To interpolate values

- To recover the consistency with the Boundary Integrals formulation

- Debugging

In the shepard factor computation the fluid extension particles are not taken into account.

- Parameters

-

imove Moving flags. - imove > 0 for regular fluid particles.

- imove = 0 for sensors.

- imove < 0 for boundary elements/particles.

r Position \( \mathbf{r} \). rho Density \( \rho \). m Mass \( m \). mpi_r Neighs position \( \mathbf{r} \). mpi_rho Neighs density \( \rho \). mpi_m Neighs mass \( m \). shepard Shepard term \( \gamma(\mathbf{x}) = \int_{\Omega} W(\mathbf{y} - \mathbf{x}) \mathrm{d}\mathbf{y} \). icell Cell where each particle is located. ihoc Head of chain for each cell (first particle found). N Number of particles. n_cells Number of cells in each direction

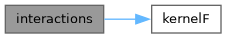

◆ interactions()

| __kernel void interactions | ( | const __global int * | imove, |

| const __global vec * | r, | ||

| const __global vec * | u, | ||

| const __global float * | rho, | ||

| const __global float * | p, | ||

| const __global vec * | mpi_r, | ||

| const __global vec * | mpi_u, | ||

| const __global float * | mpi_rho, | ||

| const __global float * | mpi_p, | ||

| const __global float * | mpi_m, | ||

| __global vec * | grad_p, | ||

| __global vec * | lap_u, | ||

| __global float * | div_u, | ||

| usize | N, | ||

| LINKLIST_REMOTE_PARAMS | ) |

Fluid particles interactions with the neighbours from other processes.

Compute the differential operators involved in the numerical scheme, taking into account just the fluid-fluid interactions with the MPI revceived.

- Parameters

-

imove Moving flags. - imove > 0 for regular fluid particles.

- imove = 0 for sensors.

- imove < 0 for boundary elements/particles.

r Position \( \mathbf{r} \). u Velocity \( \mathbf{u} \). rho Density \( \rho \). p Pressure \( p \). mpi_r Neighs position \( \mathbf{r} \). mpi_u Neighs velocity \( \mathbf{u} \). mpi_rho Neighs density \( \rho \). mpi_p Neighs pressure \( p \). mpi_m Neighs mass \( m \). grad_p Pressure gradient \( \frac{\nabla p}{rho} \). lap_u Velocity laplacian \( \frac{\Delta \mathbf{u}}{rho} \). div_u Velocity divergence \( \rho \nabla \cdot \mathbf{u} \). N Number of particles. icell Cell where each particle is located. mpi_icell Cell where each neighbour is located. mpi_ihoc Head of chain for each cell (first neighbour found). n_cells Number of cells in each direction

◆ remove()

| __kernel void remove | ( | __global int * | imove, |

| __global vec * | r, | ||

| __global vec * | u, | ||

| __global vec * | dudt, | ||

| __global float * | m, | ||

| const __global usize * | mpi_local_mask, | ||

| unsigned int | mpi_rank, | ||

| vec | domain_max, | ||

| usize | N ) |

Remove the particles sent to other processes.

- Parameters

-

imove Moving flags - imove > 0 for regular fluid particles

- imove = 0 for sensors

- imove < 0 for boundary elements/particles

r Position \( \mathbf{r} \) u Velocity \( \mathbf{u} \). dudt Velocity rate of change \( \frac{d \mathbf{u}}{d t} \). m Mass \( m \). mpi_local_mask Incoming processes mask mpi_rank The current process id N Number of particles

◆ sort()

| __kernel void sort | ( | const __global unsigned int * | mpi_iset_in, |

| __global unsigned int * | mpi_iset, | ||

| const __global vec * | mpi_r_in, | ||

| __global vec * | mpi_r, | ||

| const __global vec * | mpi_u_in, | ||

| __global vec * | mpi_u, | ||

| const __global float * | mpi_rho_in, | ||

| __global float * | mpi_rho, | ||

| const __global float * | mpi_m_in, | ||

| __global float * | mpi_m, | ||

| const __global usize * | mpi_id_sorted, | ||

| usize | N ) |

Sort all the particle variables by the cell indexes.

Due to the large number of registers consumed (21 global memory arrays are loaded), it is safer carrying out the sorting process in to stages. This is the first stage.

- Parameters

-

mpi_r_in Unsorted position \( \mathbf{r} \). mpi_r Sorted position \( \mathbf{r} \). mpi_u_in Unsorted velocity \( \mathbf{u} \). mpi_u Sorted velocity \( \mathbf{u} \). mpi_rho_in Unsorted density \( \rho \). mpi_rho Sorted density \( \rho \). mpi_m_in Unsorted mass \( m \). mpi_m Sorted mass \( m \). mpi_id_sorted Permutations list from the unsorted space to the sorted one. N Number of particles.

Generated by